Using ML and AI in the Energy Sector (With Case Studies)

Explore machine learning and AI in the energy sector, including renewables, in this summary of Dr. Kyri Baker’s keynote from our annual Summit.

You can use artificial intelligence and machine learning to predict the optimal operation of the power grid. Plus, finding faster, more accurate methods for optimizing the power grid can help enable the increased penetration of renewables and flexible assets like batteries.

AI and ML Definitions

First, a few definitions. Artificial intelligence (AI) encompasses much more than machine learning (ML). AI covers any form of artificial intelligence, including rule-based systems and trial-and-error approaches that aren't deep learning (which is what people typically mean when they talk about AI). ML is a subset of AI.

In machine learning, the key difference is that you don't explicitly tell the algorithm what to do. Although people tend to think of machine learning as being composed of neural networks (i.e., models loosely based on the human brain and nervous system), that's not always the case. For example, linear regression, such as fitting a line to data points in Excel, is machine learning. Even though we tend to think of artificial intelligence as the more complex of the two, it's actually the other way around.
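
To make the point that fitting a line counts as machine learning, here is a minimal Python sketch of ordinary least squares on a few made-up points. The function name `fit_line` and the data are hypothetical. The algorithm is never told the slope or intercept; it estimates both from the data.

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Slope = covariance(x, y) / variance(x); the intercept follows from the means.
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


# Hypothetical data points (illustrative only).
xs = [1.0, 2.0, 3.0, 4.0]
ys = [3.0, 5.0, 7.0, 9.0]
slope, intercept = fit_line(xs, ys)
print(slope, intercept)  # 2.0 1.0
```

For these points, the fit recovers y = 2x + 1 purely from the data.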

Since computing power has increased dramatically in recent years, it's become much easier to train massive deep-learning models to learn patterns within data. In energy systems, renewable energy, smart grid, and machine learning technologies are all advancing at the same time. So maybe it makes sense to combine them in a way that revolutionizes our energy system.

AI and Energy Industry Changes

Let’s look at the factors involved as well as what’s going on in the market. Computing power is becoming more widely available and less expensive. When neural networks (a set of algorithms loosely modeled after the human brain that are designed to recognize patterns) first appeared decades ago, some were implemented in hardware because we didn't have enough software capability. In recent years, that's changed due to lower costs and more widespread availability of physical sensors, data storage, and data collection devices. Specifically, advances in GPU computing have enabled deep-learning applications such as computer vision, computer graphics, and even gaming.

Many energy systems produce a lot of data that we don’t know what to do with. For example, the smart meters in our houses contain sensors that aren’t even used, and Independent System Operators (ISOs) throw away a lot of data because they don't know what to do with it. So let's think about how to use that data.

Case Study 1: Fast Grid Operations

Consider how the current grid and markets are operated now that renewable energy penetration is introducing a lot of intermittency and variability.

How do we solve the power flow and balance supply and demand in real time? Our current simplified models operate on a five-minute time scale, but we're seeing fluctuations that are significantly faster than five minutes. Let's think about how we can use data to speed up these grid operations.

This isn’t a trivial problem. We make a lot of approximations when we clear markets and determine locational marginal prices (LMPs). The power grid is possibly the most complex human-made system in existence, and we sometimes forget that we have to balance supply and demand at a subsecond level to avoid catastrophes. This is why the US National Academy of Engineering ranked electrification as the greatest engineering achievement of the 20th century.

However, infrastructure is aging, and extreme weather events are threatening the power grid. We’re getting outages like we've never seen before, stressing grid operators and changing how we deliver power to consumers. The paradigm used to be large-scale generation, with reliable transmission piping power down to consumers, leading to relatively predictable load patterns. Now we're getting dramatic increases in electric vehicles, flexible demand, and intermittent energy sources.

How can we operate the power grid more reliably? Let’s start by looking at how we currently optimize large-scale grids. When the market clears in PJM, that means they solved the security-constrained economic dispatch or security-constrained DC optimal power flow, which is an optimization problem. They then run an AC power flow to check that it was physically feasible. Often it isn’t, so they perform corrective actions. If there are voltage violations, then they perform an iterative process where they solve a set of optimization problems.

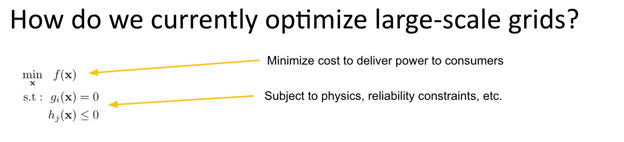

Inside our optimization, we have a cost function f(x), which represents the generators' bid curves. g(x) is the power flow equation, ensuring that power flowing into a bus equals power flowing out of it. Then we have inequality constraints, h(x), which capture things like generator ramp limits and upper and lower capacity limits.
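
Written out, the generic optimization described above takes the following standard textbook form. Here x stacks the decision variables (for example, generator outputs and, in the AC version, bus voltage magnitudes and angles). This is a general sketch, not necessarily the exact formulation any particular ISO uses:

$$
\begin{aligned}
\min_{x} \quad & f(x) && \text{(generator bid costs)} \\
\text{subject to} \quad & g(x) = 0 && \text{(power balance at every bus)} \\
& h(x) \le 0 && \text{(ramp limits, capacity limits, etc.)}
\end{aligned}
$$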

The true problem is highly nonlinear, because power flows through the network in a complex way. To address this, the power flow equations are linearized. This is the DC optimal power flow problem, which is what we solve at today's five-minute time scale. However, linearizing introduces suboptimality. When we linearize the dynamics of the grid, we're not actually optimizing power delivery to customers; we're not turning on the right generators at the right level.
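
The following minimal DC power flow sketch on a hypothetical 3-bus network shows what the linearization looks like in practice. It assumes the standard DC simplifications: lossless lines, all voltage magnitudes at 1.0 per unit, and angle differences small enough that sin θ ≈ θ. Under those assumptions, power flow reduces to a linear system B θ = P. The bus data, reactances, and the function name `dc_power_flow` are illustrative assumptions. To keep the sketch short, the solver is hard-coded for exactly two non-slack buses.

```python
# Hypothetical 3-bus network in per unit. Bus 1 is the slack/reference bus (angle 0).
LINES = {(1, 2): 0.1, (1, 3): 0.1, (2, 3): 0.1}  # line reactances x
P = {2: 0.5, 3: -1.0}  # net injections: generator at bus 2, load at bus 3


def dc_power_flow(lines, p):
    """Solve B * theta = P for non-slack buses 2 and 3, then compute line flows."""
    idx = {2: 0, 3: 1}  # position of each non-slack bus in the reduced matrix
    b = [[0.0, 0.0], [0.0, 0.0]]
    for (i, k), x in lines.items():
        for m in (i, k):  # each line adds 1/x to the diagonal of its end buses
            if m in idx:
                b[idx[m]][idx[m]] += 1 / x
        if i in idx and k in idx:  # and subtracts 1/x off-diagonal
            b[idx[i]][idx[k]] -= 1 / x
            b[idx[k]][idx[i]] -= 1 / x
    det = b[0][0] * b[1][1] - b[0][1] * b[1][0]
    # Cramer's rule for the 2x2 linear system
    theta = {
        1: 0.0,
        2: (p[2] * b[1][1] - b[0][1] * p[3]) / det,
        3: (b[0][0] * p[3] - b[1][0] * p[2]) / det,
    }
    # DC line flow from i to k is (theta_i - theta_k) / x_ik
    flows = {(i, k): (theta[i] - theta[k]) / x for (i, k), x in lines.items()}
    return theta, flows


theta, flows = dc_power_flow(LINES, P)
print(theta, flows)
```

Each line flow is just an angle difference divided by a reactance. The sines, cosines, voltage magnitudes, and losses of the full AC model have disappeared, which is what makes the problem fast to solve and also why it is only an approximation.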

Since computers weren't great in the 1970s when the DC optimal power flow (DCOPF) problem really took off, we’re working with an outdated system. Studies show that billions of dollars are lost annually due to these suboptimalities. Some studies show we can cut about 500 million metric tons of carbon dioxide by improving global grid efficiencies.

Applying Machine Learning to the Problem

There have been many advancements in computing power, clean data, big data, and clean tech. Let's capitalize on the fact that these power grid operators repeatedly solve the same optimization problem with some minor changes every day for years, and let's train a machine learning algorithm to help them solve this problem.

Ideally, optimizing the grid should use the full-fidelity AC optimal power flow model, but this model is hard to use for real-time operation. Current operational methods don’t capitalize on historical data.

This brings us back to the most simplified version of this AC power flow problem. If we were to include the true physics of the grid, you would see that it's very complicated. We have voltage magnitudes (typically ignored in DCOPF), sines, and cosines. Scaled up to a large power system, this problem is impossible to solve fast enough to balance supply and demand at a subsecond level, even with current computing power.
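
For reference, these are the standard polar-form AC power flow equations that introduce those voltage magnitudes, sines, and cosines. For each bus i in an N-bus system, G + jB is the bus admittance matrix and θ_ik = θ_i − θ_k:

$$
\begin{aligned}
P_i &= \sum_{k=1}^{N} |V_i|\,|V_k| \left( G_{ik}\cos\theta_{ik} + B_{ik}\sin\theta_{ik} \right) \\
Q_i &= \sum_{k=1}^{N} |V_i|\,|V_k| \left( G_{ik}\sin\theta_{ik} - B_{ik}\cos\theta_{ik} \right)
\end{aligned}
$$

The DC approximation sets every $|V_i| = 1$, drops the conductances $G_{ik}$ (lossless lines), and uses $\sin\theta_{ik} \approx \theta_{ik}$. For lines with reactance $x_{ik}$, this leaves only the linear relation $P_i \approx \sum_{k \ne i} (\theta_i - \theta_k)/x_{ik}$, and the reactive power equation is discarded entirely.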

The key takeaway is that solving this optimization problem is complicated. In the current paradigm, we use grid simulator software, which starts from an initial guess for the optimization solution and uses algorithms like Newton-Raphson to iterate toward the optimal solution. From there, we check whether the algorithm converges. If it doesn't, we change parameters and try again.
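
The following minimal sketch applies the Newton-Raphson iteration to the simplest possible AC power flow: a hypothetical two-bus system with a slack bus and one load bus joined by a lossless line. It starts from a "flat start" guess (angle 0, voltage 1.0 per unit). At each step, it linearizes the equations with the Jacobian and solves for a correction, repeating until the power mismatch is tiny. Real grid simulators apply the same idea to thousands of buses using sparse matrix solvers. The system data and function names here are illustrative assumptions.

```python
import math

# Hypothetical 2-bus system in per unit: bus 1 is the slack bus (V = 1.0, angle 0);
# bus 2 is a load bus connected through a lossless line with reactance X.
X = 0.1
V1 = 1.0
P_LOAD, Q_LOAD = 1.0, 0.5  # real and reactive demand at bus 2


def injections(theta, v):
    """AC power injected at bus 2 (negative when bus 2 consumes power)."""
    p = v * V1 * math.sin(theta) / X
    q = (v * v - v * V1 * math.cos(theta)) / X
    return p, q


def newton_raphson(tol=1e-10, max_iter=20):
    theta, v = 0.0, 1.0  # flat-start initial guess
    for it in range(1, max_iter + 1):
        p, q = injections(theta, v)
        dp, dq = -P_LOAD - p, -Q_LOAD - q  # mismatch: specified minus computed
        if max(abs(dp), abs(dq)) < tol:
            return theta, v, it
        # Jacobian of (p, q) with respect to (theta, v)
        j11 = v * V1 * math.cos(theta) / X
        j12 = V1 * math.sin(theta) / X
        j21 = v * V1 * math.sin(theta) / X
        j22 = (2 * v - V1 * math.cos(theta)) / X
        det = j11 * j22 - j12 * j21
        # Solve J * [d_theta, d_v] = [dp, dq] with Cramer's rule and update
        theta += (dp * j22 - j12 * dq) / det
        v += (j11 * dq - j21 * dp) / det
    raise RuntimeError("Newton-Raphson did not converge")


theta, v, iters = newton_raphson()
print(f"V2 = {v:.4f} p.u., angle = {math.degrees(theta):.2f} deg, {iters} iterations")
```

If the mismatch never falls below the tolerance (for example, when the load is too large for the line to serve), the function raises an error. That corresponds to the "doesn't solve" case, where the parameters get changed and the solve is tried again.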

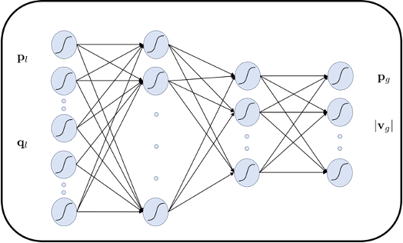

With unlimited time, we could run these grid simulators offline, generating a mapping between new loading or weather scenarios and the optimal operation of generators. Then we’d train a neural network to learn this complex mapping, so we wouldn't have to re-solve the same complicated optimization problem every second. During what's called inference (when we actually predict solutions), the trained neural network would make a prediction, taking a few milliseconds to output how the generators should operate. This leads to an important question: Can we obtain the solution to an optimization problem without actually solving one?
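
The offline/online split described above can be sketched in a few lines. This is a deliberately simplified stand-in, not the method from the keynote. The "expensive" solver is a hypothetical two-generator economic dispatch with quadratic costs and capacity limits, solved by bisection on the system marginal cost. The "neural network" is replaced by piecewise-linear interpolation over the offline solutions. All costs, limits, and function names are assumptions.

```python
import bisect

# Hypothetical generators: cost_i(p) = A[i] * p**2 + B[i] * p, with 0 <= p <= PMAX[i] (MW).
A = [0.01, 0.02]      # $/MW^2h
B = [20.0, 15.0]      # $/MWh
PMAX = [300.0, 200.0]


def dispatch_at(lam):
    """Each generator runs where its marginal cost 2*A*p + B equals lam, within limits."""
    return [min(max((lam - b) / (2 * a), 0.0), pmax) for a, b, pmax in zip(A, B, PMAX)]


def solve_dispatch(demand, lo=0.0, hi=100.0, iters=100):
    """'Expensive' solver: bisect on the marginal cost until supply meets demand."""
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        if sum(dispatch_at(mid)) < demand:
            lo = mid
        else:
            hi = mid
    return dispatch_at(0.5 * (lo + hi))


# Offline: solve many scenarios (loads from 0 to 500 MW) and store the results.
train_loads = [5.0 * k for k in range(101)]
train_dispatch = [solve_dispatch(d) for d in train_loads]


def predict_dispatch(demand):
    """Online 'inference': interpolate between stored solutions instead of solving."""
    i = bisect.bisect_right(train_loads, demand)
    i = min(max(i, 1), len(train_loads) - 1)
    d0, d1 = train_loads[i - 1], train_loads[i]
    w = (demand - d0) / (d1 - d0)
    return [(1 - w) * p0 + w * p1
            for p0, p1 in zip(train_dispatch[i - 1], train_dispatch[i])]


print(predict_dispatch(212.8), solve_dispatch(212.8))
```

The true load-to-dispatch mapping here is piecewise linear, with kinks at 125 MW and 350 MW. Both kinks fall on the training grid, so interpolation reproduces the solver's answer almost exactly. For a real AC optimal power flow, the mapping is far more complex, which is why the text proposes learning it with a neural network instead.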

Machine Learning in the Energy Sector in Practice

Recently, I cohosted a webinar with seven ISOs and asked how they were using machine learning in practice. Many don't like the idea of replacing grid operations with a black box. Let’s explore how machine learning can assist grid operators rather than replace them.

Neural networks are what people usually mean when they talk about deep learning. They’re modeled after the human brain, where certain pathways activate when certain things happen. We can use neural networks to represent complicated relationships between variables. Alternatively, we can convexify or linearize the hard equations (e.g., the AC power flow equations) to solve these problems quickly, though convexifying generally loses information.

Although neural networks are still an approximation, they’re a good approximation, as you know if you’ve looked at ChatGPT or AI-generated images. This isn’t like the DCOPF approximation, where we assume the lines are lossless. (See the high-level depiction in the image below.)

Optimizing the Power Grid with AI and Machine Learning

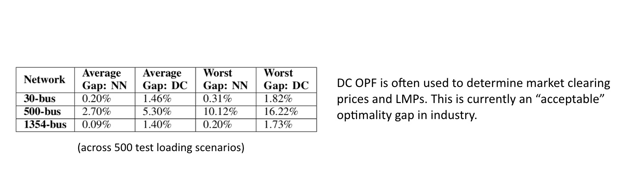

So far, we’ve explored what's called the optimality gap. If we were to optimize the system in the most efficient way with a neural network versus DCOPF (which is currently used to clear markets in many areas), what's the optimality gap? The results depend on the quality of the dataset. Without a comprehensive representation of scenarios and inputs, the model will perform poorly.

In the image below, we took a hypothetical 1,300-bus system, which could represent a geographical region of a state or multiple states, and ran 500 different scenarios across the grid. The average gap from DCOPF was 1.4%, which adds up to millions of dollars each year. The neural network can predict how the grid should optimally operate to within a tenth of a percent, which is powerful.
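
One common way to define the optimality gap is the extra cost of an approximate solution relative to the best known solution. The sketch below uses that definition. It applies the 1.4% average DCOPF gap quoted above, and the upper bound of a tenth of a percent for the neural network, to a hypothetical $1 billion annual production cost. The dollar figure and the function name `optimality_gap` are assumptions for illustration only, not numbers from the study.

```python
def optimality_gap(cost_approx, cost_best):
    """Relative extra cost of an approximate dispatch versus the best known one."""
    return (cost_approx - cost_best) / cost_best


# Hypothetical annual production cost for a region, in dollars (illustrative only).
ANNUAL_COST = 1_000_000_000

for label, gap in [("DCOPF", 0.014), ("neural network (upper bound)", 0.001)]:
    print(f"{label}: {gap:.1%} gap -> about ${gap * ANNUAL_COST:,.0f} per year")
```

At that assumed scale, a 1.4% gap is about $14 million a year. A gap within a tenth of a percent is at most about $1 million.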

However, would we have confidence using a neural network during the February 2021 Texas freeze caused by Winter Storm Uri? Most people wouldn’t care about fractions of a percent of optimality; they’re more interested in keeping the heat and lights on.

Case Study 2: COVID-19

How do we gain confidence? In the previous example, we showed good results where machine learning did a great job running a grid, but sometimes it doesn’t do a great job. During the COVID-19 pandemic, we had a forecasting problem, with many ISOs’ traditional forecasting algorithms trained on historical data. The unprecedented stay-at-home orders in the US changed everything.

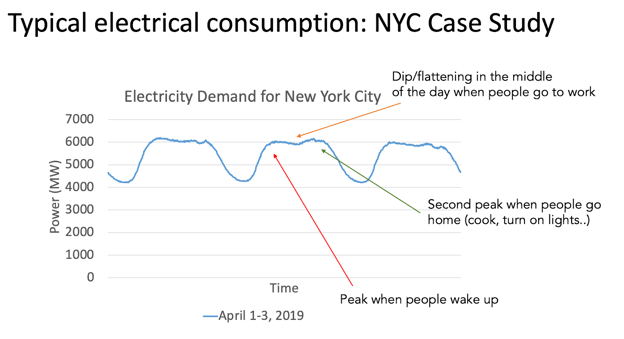

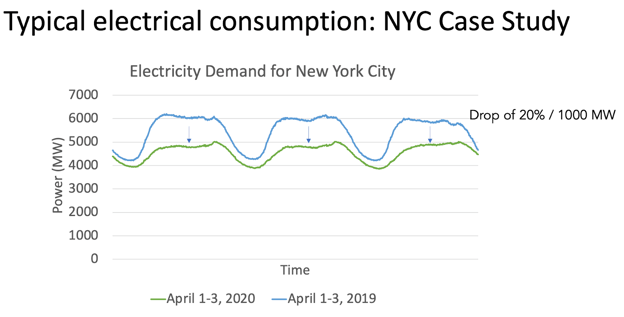

Residential, commercial, and industrial buildings consume a significant amount of electricity, and the stay-at-home orders changed where the electrons typically flow. The chart below highlights three days of pre-pandemic electricity demand in New York City. There’s a relatively predictable peak when people wake up, then a dip in the middle of the day when people are at work and more energy flows into commercial and office buildings rather than homes. There’s a second peak when people return home, with increased energy as people cook, turn on TVs, etc. And then another dip when people go to sleep. Overall, it was a predictable pattern in residential areas.

Data from NYISO’s data portal (https://www.nyiso.com/energy-market-operational-data)

We chose April 1 to 3 for both charts because these were all weekdays, with similar weather patterns.

Now let’s look at what happened in 2020. As you’ll see in the chart below, there was a huge dip in energy consumption as restaurants and businesses shut down: consumption decreased 20%, with New York City's load dropping by 1,000 megawatts.

Data from NYISO’s data portal (https://www.nyiso.com/energy-market-operational-data)

The issue wasn’t an ordinary forecasting error; it was a huge change in load patterns. If you had run an ML algorithm to predict the load that day to decide which generators to dispatch, you would have had severe over-generation problems. The stay-at-home orders weren’t in the machine-learning model; I haven't seen a forecasting neural network that includes a global pandemic input. Completely unforeseen situations such as the pandemic raise another set of issues.
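
A minimal sketch of why a model trained only on historical data misses this kind of shift. The forecaster is a naive hourly-average model, used here as a stand-in for a trained ML forecaster, and the hourly load shape is hypothetical (not NYISO data). The 20% drop matches the figure quoted above. Nothing in the model's inputs describes the stay-at-home orders, so its forecast doesn't change when the orders take effect.

```python
# Hypothetical weekday hourly load shape in MW (illustrative only, not NYISO data):
# morning rise, midday plateau, evening peak, overnight trough.
TYPICAL_DAY = [5200, 5000, 4900, 4850, 4900, 5200, 5800, 6300, 6500, 6400, 6300, 6250,
               6200, 6200, 6250, 6400, 6700, 7000, 7100, 6900, 6600, 6200, 5800, 5400]


def fit_hourly_average(history):
    """Naive forecaster: the average load at each hour across past days."""
    return [sum(day[h] for day in history) / len(history) for h in range(24)]


# "Training" data: three similar pre-pandemic weekdays.
model = fit_hourly_average([TYPICAL_DAY] * 3)

# Hypothetical lockdown day: load falls 20% at every hour, but the forecast is unchanged.
actual = [0.8 * mw for mw in TYPICAL_DAY]
over_forecast = [f - a for f, a in zip(model, actual)]
worst_relative_error = max(f / a - 1 for f, a in zip(model, actual))
print(f"Forecast exceeds actual load by up to {worst_relative_error:.0%}")
```

A 20% drop in load leaves the unchanged forecast 25% above actual load (1 / 0.8 = 1.25). Dispatching to that forecast schedules generation that has no demand to serve.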

Here lies the importance of data. We’ve used neural networks in forecasting for a long time. When I spoke with the seven ISO grid operators, they said that they feel more comfortable using machine learning for forecasting, since the neural net wouldn’t directly operate the system.

Traditional forecasting techniques may use limited inputs to forecast demand, such as the day of the week, temperature, or location. Deep learning, on the other hand, can use many more variables and uncover relationships that aren’t obvious to the human eye. It also performs automatic feature extraction, which means we can throw a ton of data at a neural network and it will learn to deemphasize the variables that aren't important.

However, it’s not a panacea: in the case of our pandemic stay-at-home orders, ML would have failed too. Thankfully, the forecast in our example was an overestimate rather than an underestimate; underestimates can cause widespread blackouts.

Reduce Power Grid Emissions with a Software Upgrade

Power grid operators have to learn that software isn’t something to take lightly. It’s important to understand that you can reduce grid emissions and grid cost with a software upgrade.

If we change the way that we dispatch generators, including the way we plan and forecast, we can heavily mitigate carbon emissions, reduce costs for consumers, and help improve the ability to meet future demand.

Learn about Dr. Kyri Baker’s work, and connect with her. Delve further into this topic with her latest blog, Using AI and Machine Learning for Power Grid Optimization.

At Yes Energy®, our teams are composed of experts entirely focused on power market data. That’s all we do. We understand the complexity and unique challenges of nodal power markets, and it’s why we’re committed to offering superior data to meet your needs. Learn how we can help you Win the Day Ahead™ with Better Data, Better Delivery, and Better Direction.
